Over the last couple of weeks, I have read a lot of blog postings about REST services in combination with the Oracle Service Bus (like this posting).

I even made a first attempt to write a posting about this subject, but I got such constructive comments in return that I decided to write a completely new one. Thanks for the comments :) I decided to play around with REST and OSB myself and chose JAX-RS (Jersey) as the Java technology to build my REST service and the brand new OSB 11g release for proxying this REST service. In this posting, I will show you how easy it is to build a REST/JSON service with the feature-rich and highly flexible JAX-RS standard, deploy it to WebLogic 10.3.3 and proxy it with an OSB 11g service.

REST service in JAX-RS

Required libraries (can be downloaded from here):

- jersey-bundle-1.1.5.1.jar

- jsr311-api-1.1.jar

- asm-3.1.jar

In JDeveloper I built a very simple REST product service with a single method to find a product by its id. The REST method returns a JSON representation of the product.

First you have to create a new web application project in JDeveloper, because the JAX-RS product service is deployed as a web application to the WebLogic Server. In the web.xml file the following things have to be specified:

- The JAX-RS servlet, which will handle all requests and forward them to the appropriate REST service class

- The context path to access the servlet

- The mime type application/json

The web.xml has the following content:

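    <web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
             version="2.5" xmlns="http://java.sun.com/xml/ns/javaee">
      <description>web.xml for</description>
      <session-config>
        <session-timeout>10</session-timeout>
      </session-config>
      <mime-mapping>
        <extension>html</extension>
        <mime-type>text/html</mime-type>
      </mime-mapping>
      <mime-mapping>
        <extension>json</extension>
        <mime-type>application/json</mime-type>
      </mime-mapping>
      <mime-mapping>
        <extension>txt</extension>
        <mime-type>text/plain</mime-type>
      </mime-mapping>
      <servlet>
        <display-name>JAX-RS Servlet</display-name>
        <servlet-name>jersey-servlet</servlet-name>
        <servlet-class>com.sun.jersey.spi.container.servlet.ServletContainer</servlet-class>
        <init-param>
          <param-name>com.sun.jersey.config.property.resourceConfigClass</param-name>
          <param-value>com.sun.jersey.api.core.PackagesResourceConfig</param-value>
        </init-param>
        <init-param>
          <param-name>com.sun.jersey.config.property.packages</param-name>
          <param-value>nl.oracle.com.fmw.rest;nl.oracle.com.fmw.rest.model</param-value>
        </init-param>
      </servlet>
      <servlet-mapping>
        <servlet-name>jersey-servlet</servlet-name>
        <url-pattern>/*</url-pattern>
      </servlet-mapping>
    </web-app>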

Now that the web.xml file is in place, two Java classes have to be implemented:

The ProductResource class will contain the method to find a product by its id:

    package nl.oracle.com.fmw.rest;

    import javax.ws.rs.GET;
    import javax.ws.rs.Path;
    import javax.ws.rs.PathParam;
    import javax.ws.rs.Produces;

    import nl.oracle.com.fmw.rest.model.Product;

    @Path("/products")
    public class ProductResource {

        @GET
        @Path("{id}")
        @Produces("application/json")
        public Product getProductById(@PathParam("id") int id) {
            // Return a simple new product with the provided id
            return new Product(id, "DummyProduct");
        }
    }

The code listing above shows that the getProductById method is only accessible through the HTTP GET method. The @Path annotations on the class and method level set the relative URI under which the service can be accessed. The {id} serves as a placeholder for the product id and can be accessed through the @PathParam annotation.
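To make the placeholder idea concrete, here is a minimal sketch in plain Java of what matching "/products" plus "{id}" against a request path amounts to. It is a hand-rolled illustration, not Jersey's actual routing code; the class name PathTemplateSketch and the method extractId are made up for this example.

```java
import java.util.OptionalInt;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustration only: a hand-rolled matcher, NOT how Jersey routes requests internally.
class PathTemplateSketch {

    // Class-level "/products" followed by method-level "{id}";
    // the {id} placeholder becomes a capture group for one path segment.
    private static final Pattern PRODUCT_BY_ID = Pattern.compile("^/products/([^/]+)$");

    /** Returns the id found in the path, or an empty result if the path does not fit the template. */
    static OptionalInt extractId(String relativePath) {
        Matcher matcher = PRODUCT_BY_ID.matcher(relativePath);
        if (!matcher.matches()) {
            return OptionalInt.empty();
        }
        try {
            // The resource method declares "int id", so the segment has to parse as an int.
            return OptionalInt.of(Integer.parseInt(matcher.group(1)));
        } catch (NumberFormatException e) {
            return OptionalInt.empty(); // e.g. /products/abc
        }
    }

    public static void main(String[] args) {
        System.out.println(extractId("/products/1"));
        System.out.println(extractId("/products"));
    }
}
```

With JAX-RS you never write this yourself: the annotations declare the template and the runtime does the matching and the conversion to int.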

The Product class is shown in the following code listing and uses the JAXB XmlRootElement binding annotation to automatically map the class structure to a JSON structure. Isn't that cool :). More JSON serialization and deserialization options in JAX-RS can be found here.

    package nl.oracle.com.fmw.rest.model;

    import javax.xml.bind.annotation.XmlRootElement;

    @XmlRootElement
    public class Product {

        private int id;
        private String name;

        // JAXB requires a no-argument constructor
        public Product() {
        }

        public Product(int id, String name) {
            this.id = id;
            this.name = name;
        }

        public void setId(int id) {
            this.id = id;
        }

        public int getId() {
            return id;
        }

        public void setName(String name) {
            this.name = name;
        }

        public String getName() {
            return name;
        }
    }

Coding is completed and it should be clear now that the use of annotations makes it very easy and flexible to create REST services with JAX-RS. Create a WAR deployment descriptor and make sure you add the jersey libraries to the WAR file and set the JEE web context root to

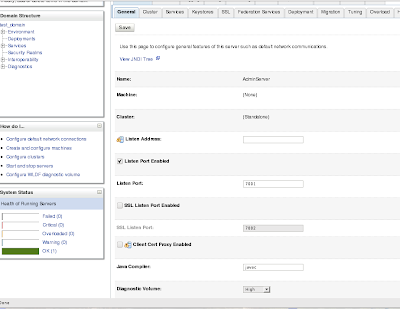

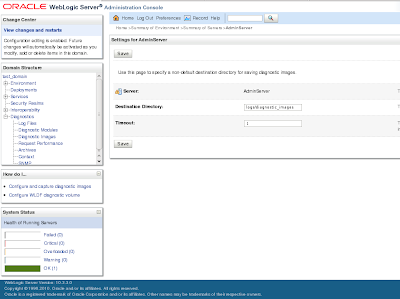

services. Finally, deploy the WAR file to the WebLogic 10.3.3 server. I target the application to the

osb_server1 managed server, which hosts my OSB 11g installation.

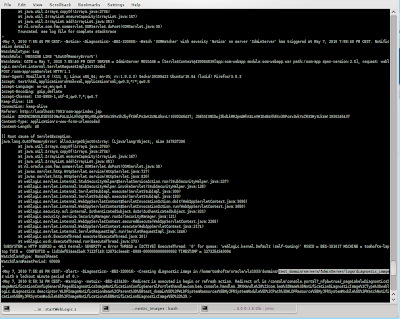

After deployment you can test the REST service using this URL where I use 1 as the id:

http://localhost:port/services/products/1OSB 11g proxyI used the brand new

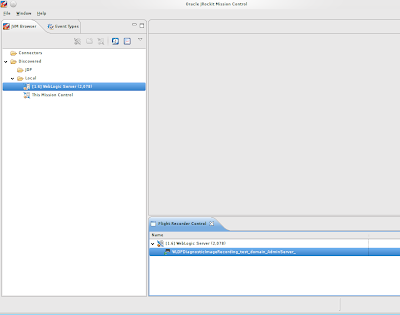

OSB 11g installation for creating a proxy service for my REST service.

The implementation is fairly simple and straightforward and follows more or less the same steps used in this excellent posting:

Business Service

The business service invokes the REST product service. Make sure you use the messaging service type with the HTTP transport protocol. Also set the HTTP method to GET.

Proxy Service

Create a proxy service in Eclipse and use the messaging service type with the HTTP transport protocol. I used request type none and response type text. In the message flow I added a routing action to invoke the business service.

I need to mention two important things about my proxy service implementation:

- Set the endpoint to /osb-services/products. This enables you to add anything to this context path, for example /{id}. It also makes it possible to use the OSB service as a proxy for different types of REST calls to the same product service (make sure you can switch between HTTP methods in the message flow).

- Use the transport request element from the $inbound variable to append the relative path after /osb-services/products to the REST service endpoint. I've used an insert operation for this:
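The path handling described in the second point boils down to simple string work, sketched below in plain Java. This is not the OSB message flow itself (there it is an insert action, not Java code); the class and method names are made up for illustration, and the backend base URL is passed in as a parameter because the real host and port depend on your installation.

```java
// Illustration only: mimics what the proxy does with the request path.
// In OSB this is configured in the message flow, not written in Java.
class ProxyUriSketch {

    // The endpoint configured on the proxy service (taken from the text above).
    static final String PROXY_ENDPOINT = "/osb-services/products";

    /** Returns whatever follows the proxy endpoint, e.g. "/1" for "/osb-services/products/1". */
    static String relativeUri(String requestPath) {
        if (!requestPath.startsWith(PROXY_ENDPOINT)) {
            throw new IllegalArgumentException("Not a proxy path: " + requestPath);
        }
        String rest = requestPath.substring(PROXY_ENDPOINT.length());
        // Guard against look-alike paths such as "/osb-services/productsX".
        if (!rest.isEmpty() && !rest.startsWith("/")) {
            throw new IllegalArgumentException("Not a proxy path: " + requestPath);
        }
        return rest;
    }

    /** Appends the relative part of the incoming request to the REST service endpoint. */
    static String backendUri(String backendBase, String requestPath) {
        return backendBase + relativeUri(requestPath);
    }

    public static void main(String[] args) {
        // "port" is left as the placeholder used elsewhere in this posting.
        System.out.println(
            backendUri("http://localhost:port/services/products", "/osb-services/products/1"));
    }
}
```

Because only the part after the proxy endpoint is forwarded, the same proxy keeps working when the product service later gets more sub-paths.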

Deploy the OSB service to the OSB 11g server and use the test console to test the service. Make sure you set the relative-uri attribute in the test console transport panel:

The $body element in the response message should look like this:

The OSB service just passes the JSON response on to the client. It is fairly simple to convert the JSON output to XML and vice versa using JSON lib in a Java service callout. How to do this is described in this posting.
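If you want to check the pass-through from outside the test console, a small Java client that calls the proxy URL will do. The sketch below uses only the JDK's HttpURLConnection; the class name ProxyClientSketch is made up, and the URL has to be supplied as an argument since host and port depend on your own OSB installation.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

// Minimal client sketch; error handling is deliberately thin.
class ProxyClientSketch {

    /** Prepares (but does not yet send) a GET request that asks for JSON. */
    static HttpURLConnection prepareGet(String url) {
        try {
            HttpURLConnection conn =
                (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setRequestMethod("GET");
            conn.setRequestProperty("Accept", "application/json");
            return conn;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sends the request if needed and returns the response body as one string. */
    static String readBody(HttpURLConnection conn) throws IOException {
        // getInputStream() throws an IOException for HTTP error statuses such as 404.
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            StringBuilder body = new StringBuilder();
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line);
            }
            return body.toString();
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // args[0]: the proxy URL, i.e. your own host and port followed by /osb-services/products/1
        HttpURLConnection conn = prepareGet(args[0]);
        System.out.println("HTTP " + conn.getResponseCode());
        System.out.println(readBody(conn));
    }
}
```

Pointing the same client at the direct service URL (/services/products/1) and at the proxy URL should print the same JSON if the proxy really passes the response through untouched.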

Also, the JSON structure returned by the product service is very simple. In most cases you have to do more work with JAX-RS in order to construct the required JSON structure. The JAX-RS libraries contain options for configuring JSON